The robots.txt file is the main way we have to communicate with search engines, telling them which parts of our website they may access and which they may not.

Most search engines only understand the basic directives in the robots.txt file, but some search engines also support additional directives.

In the following guide we will explain what a robots.txt file is, what it should look like, what elements it consists of, and how to upload it to our site.

What is a robots.txt file?

A robots.txt file is a file that is read by search engines’ spiders (crawlers). These crawlers are also called “robots”, hence the name of the file.

The file acts as a set of entry instructions to our site for these robots.

Before they crawl the site, they read the robots.txt file to find out which pages they are allowed to access and which ones they aren’t.

Therefore, it is very important to make sure that the robots.txt file is written correctly and precisely.

There is no margin for error here.
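To make the idea concrete, here is a minimal Python sketch of what a well-behaved crawler does with the file: read the rules once, then check each URL before requesting it. It uses Python’s standard `urllib.robotparser` module; the “/private/” rule and the URLs are hypothetical examples, and real search engine crawlers do much more (downloading and caching the file, their own matching logic, and so on).

```python
from urllib.robotparser import RobotFileParser


def allowed_urls(robots_txt: str, user_agent: str, urls: list[str]) -> list[str]:
    """Return only the URLs that robots_txt allows user_agent to crawl."""
    parser = RobotFileParser()
    # parse() takes the file's lines; a real crawler would download them first
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


# Hypothetical rules and URLs, for illustration only
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

candidates = [
    "https://www.a-designer.co.il/",
    "https://www.a-designer.co.il/blog/",
    "https://www.a-designer.co.il/private/report.html",
]

# The /private/ URL is filtered out; the other two are kept
print(allowed_urls(ROBOTS_TXT, "googlebot", candidates))
```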

Where is the robots.txt file located?

The robots.txt file must be located in the root (top-level) folder of your domain.

For example, if our website is https://www.a-designer.co.il, the robots file must be at https://www.a-designer.co.il/robots.txt.

- The file must be named exactly “robots.txt”, in lowercase letters (crawlers only look for “/robots.txt” in lowercase, and URLs are case-sensitive).
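Because the location is fixed, the robots.txt address for any page can be derived from the page’s own URL: keep the scheme (https) and the host, and replace everything else with “/robots.txt”. A small Python sketch using the standard `urllib.parse` module (the function name `robots_url` is just an illustration):

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url."""
    parts = urlsplit(page_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError(f"expected an absolute URL, got {page_url!r}")
    # Keep scheme and host (with any port); drop path, query and fragment
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://www.a-designer.co.il/blog/some-post/?ref=home"))
# https://www.a-designer.co.il/robots.txt
```

Note that the file only covers the host it is served from, so a different subdomain of the site would need its own robots.txt at its own root.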

Pros and cons of using a robots.txt file

Pro: Control of the crawl budget

The crawl budget is the amount of time a crawler will spend crawling your site, or the number of pages on your site it will crawl.

This means that every time a crawler visits your site, it has a limited, predetermined budget, so it will not necessarily crawl your whole site on every visit.

The significance of the crawl budget is this: we must manage our site’s crawl budget wisely and not waste it on pages that don’t need to be crawled.

The robots.txt file is one of the main ways to control our site’s crawl budget, since it tells crawlers where they may go and where they may not.

Con: It can’t remove pages from the search results

While the file tells crawlers where they may and may not go, it can’t tell the search engine not to index (that is, not to show in the results) a specific page or URL. A blocked page can still appear in the results, for example if other sites link to it.

Therefore, if you want to remove a certain page from Google’s search results, you need to use a different method, the meta robots tag, and give the page a NOINDEX directive. Note that the page must not be blocked in robots.txt, otherwise the crawler will never see the tag.
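The meta robots tag goes in the page’s HTML `<head>`, for example `<meta name="robots" content="noindex">`. As an illustration, here is a small Python sketch (the names `RobotsMetaFinder` and `has_noindex` are hypothetical) that uses the standard `html.parser` module to check whether a page’s HTML carries that directive:

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects the directives of every <meta name="robots"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lowercases tag and attribute names
        if tag != "meta":
            return
        attributes = {name: value or "" for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            content = attributes.get("content", "")
            # content is a comma-separated list, e.g. "noindex, follow"
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html: str) -> bool:
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page))  # True
```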

Con: Blocked pages don’t pass on their power

If you block a page from crawling, the crawler can’t follow the links on it, so that page’s power (link equity) will not be passed on to the other pages it links to.

Robots.txt syntax

The robots file consists of lines of text (directives) that specify paths on your site and the “user-agent” they apply to.

In simpler terms, each rule defines which addresses on the site we are referring to, and which search engine’s crawlers we are instructing.

These are the main elements of the syntax:

- The word “disallow” – paths the crawler is blocked from entering. Leaving the value of a “disallow” line empty means the crawler can enter anywhere on the site.

- The word “allow” – paths the crawler is allowed to enter (useful for making an exception inside a disallowed folder).

- The character “*” – a wildcard meaning “everyone and everything”. In a user-agent line it means every crawler; inside a path, search engines that support it (such as Google) treat it as any sequence of characters.

- The term “user-agent” – which crawler (search engine) the rules that follow apply to.
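The sketch below, using Python’s standard `urllib.robotparser`, shows how these elements combine. The file is a hypothetical example: the “*” group blocks every crawler from “/private/”, while googlebot gets its own group with an empty “Disallow” line, so it may go anywhere. A crawler that has a group of its own follows that group and ignores the “*” group.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with two groups
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: googlebot
Disallow:
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://www.a-designer.co.il/private/page.html"
print(parser.can_fetch("googlebot", url))  # True: its own group, empty Disallow
print(parser.can_fetch("bingbot", url))    # False: falls back to the "*" group
```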

For example:

User-agent: googlebot
Disallow: /Photo

These 2 lines tell googlebot not to crawl any URL whose path starts with “/Photo”: the “Photo” folder or file, but also, for example, “/Photos/” or “/Photo.html”.
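A quick way to see this prefix matching in action is Python’s standard `urllib.robotparser` (the sample paths below are hypothetical):

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: googlebot
Disallow: /Photo
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Every path that starts with "/Photo" is blocked, not only the folder itself
for path in ["/Photo", "/Photo/cat.jpg", "/Photos/", "/Photo.html", "/gallery/"]:
    allowed = parser.can_fetch("googlebot", "https://www.a-designer.co.il" + path)
    print(f"{path:16} {'allowed' if allowed else 'blocked'}")
```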

Another example:

User-agent: googlebot
Disallow: /Photo/

These 2 lines tell googlebot that it can’t crawl anything inside the “/Photo/” folder (all the files in it, its subfolders, etc.).

- Bear in mind that paths are case-sensitive, so in this case, for example, crawlers would still be able to enter a folder called “photo” (as opposed to “Photo”). The directive names themselves (“User-agent”, “Disallow”) are not case-sensitive.
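Both points, the trailing slash and case sensitivity, can be checked with the same standard-library parser. The helper `is_blocked` is a hypothetical name used only for this illustration:

```python
from urllib.robotparser import RobotFileParser

SITE = "https://www.a-designer.co.il"


def is_blocked(robots_txt: str, path: str, user_agent: str = "googlebot") -> bool:
    """True if robots_txt forbids user_agent from crawling SITE + path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, SITE + path)


FOLDER_RULE = "User-agent: googlebot\nDisallow: /Photo/\n"

print(is_blocked(FOLDER_RULE, "/Photo/cat.jpg"))  # True: inside the folder
print(is_blocked(FOLDER_RULE, "/Photo"))          # False: no trailing slash, no match
print(is_blocked(FOLDER_RULE, "/photo/cat.jpg"))  # False: paths are case-sensitive
```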

List of crawlers belonging to the various search engines

| Search Engine | Area | Crawler name |
| --- | --- | --- |
| Baidu | General | baiduspider |
| Baidu | Images | baiduspider-image |
| Baidu | Mobile | baiduspider-mobile |
| Baidu | News | baiduspider-news |
| Baidu | Videos | baiduspider-video |
| Bing | General | bingbot |
| Bing | General | msnbot |
| Bing | Videos and Images | msnbot-media |
| Bing | Ads | adidxbot |
| Google | General | Googlebot |
| Google | Images | Googlebot-Image |
| Google | Mobile | Googlebot-Mobile |
| Google | News | Googlebot-News |
| Google | Videos | Googlebot-Video |
| Google | AdSense | Mediapartners-Google |
| Google | AdWords | AdsBot-Google |
| Yahoo! | General | slurp |
| Yandex | General | yandex |
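The names in the table are the values you put after “User-agent:”, and several “User-agent” lines can share one set of rules. A hypothetical Python helper that writes such a group, checked with the standard `urllib.robotparser` (the “/images/” folder is an example only):

```python
from urllib.robotparser import RobotFileParser


def build_group(user_agents: list[str], disallow: list[str]) -> str:
    """Write one robots.txt group: several User-agent lines sharing the same rules."""
    lines = [f"User-agent: {agent}" for agent in user_agents]
    lines += [f"Disallow: {path}" for path in disallow]
    return "\n".join(lines) + "\n"


# Hypothetical example: keep Google's and Baidu's image crawlers out of /images/
rules = build_group(["Googlebot-Image", "baiduspider-image"], ["/images/"])
print(rules)

parser = RobotFileParser()
parser.parse(rules.splitlines())
url = "https://www.a-designer.co.il/images/logo.png"
print(parser.can_fetch("Googlebot-Image", url))  # False: named in the group
print(parser.can_fetch("Googlebot", url))        # True: not named in the group
```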

How a complete robots.txt file should look

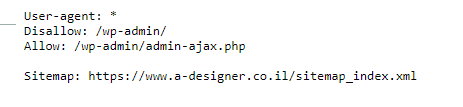

Let us take the site we have been using for examples: www.a-designer.co.il.

Let us go to this URL: https://www.a-designer.co.il/robots.txt

We get the following:

This is what most WordPress sites should show (unless there is something specific that needs changing).

What the lines of code mean

These lines address all crawlers (“User-agent: *”) and tell them not to enter /wp-admin/ (the WordPress management panel), with the exception of /wp-admin/admin-ajax.php.

In the last line you can see our sitemap, which we will cover in a separate article.
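Why does the “Allow” line win for admin-ajax.php, even though “Disallow: /wp-admin/” also matches it? Google documents that when several rules match, the most specific one (the longest path) applies, and that in a tie the least restrictive rule (“Allow”) wins. Python’s standard `urllib.robotparser` instead stops at the first matching line in file order, so it would report admin-ajax.php as blocked for this file. The sketch below is therefore a hand-written, simplified matcher (prefix matching only, no “*” or “$” wildcards), not any search engine’s actual code; the function name `is_allowed` is hypothetical.

```python
def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Longest-match evaluation of one user-agent group.

    rules holds (directive, path) pairs such as ("disallow", "/wp-admin/").
    Simplified: plain prefix matching, wildcards are not supported.
    """
    best_length = -1
    allowed = True  # if no rule matches, crawling is allowed
    for directive, rule_path in rules:
        if not rule_path or not path.startswith(rule_path):
            continue  # an empty path matches nothing; other rules must be a prefix
        is_allow = directive.lower() == "allow"
        longer = len(rule_path) > best_length
        tie_goes_to_allow = len(rule_path) == best_length and is_allow
        if longer or tie_goes_to_allow:
            best_length = len(rule_path)
            allowed = is_allow
    return allowed


WORDPRESS_RULES = [
    ("disallow", "/wp-admin/"),
    ("allow", "/wp-admin/admin-ajax.php"),
]

print(is_allowed(WORDPRESS_RULES, "/wp-admin/admin-ajax.php"))  # True
print(is_allowed(WORDPRESS_RULES, "/wp-admin/options.php"))     # False
print(is_allowed(WORDPRESS_RULES, "/blog/"))                    # True
```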

How to create a robots.txt file

To create a robots.txt file you have a few options. The two most common and easiest are:

- Using SEO plugins – Whether your site is based on WordPress, Wix, Joomla or any other system, you can install an SEO plugin that includes a robots file creation feature. In WordPress, for example, both Yoast SEO and Rank Math will create a good robots.txt file for you with one click. After installing the plugin, it is important to verify that a robots file has actually been created.

- Creating a robots.txt file manually – It’s easier than you think. To create the file manually, you create a plain text (.txt) file on your computer, add the relevant lines, connect to your server’s file manager, and upload the file. We will give an example of this later.

How to create a robots.txt file using the Yoast SEO plugin

If you have a WordPress site, install the Yoast SEO plugin.

After installation, check whether the file has been created automatically.

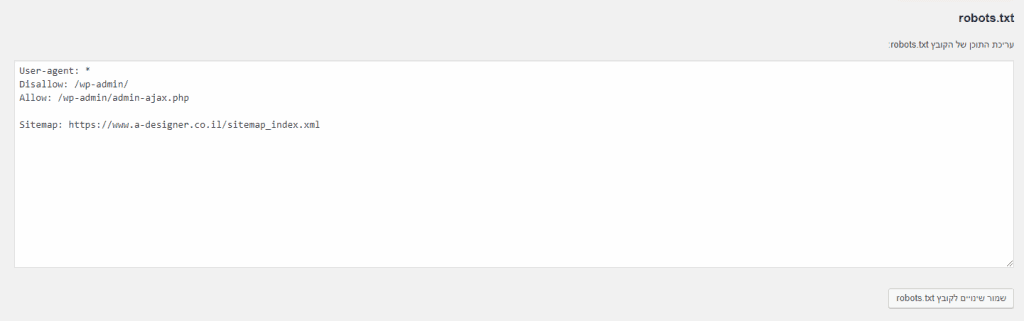

If it hasn’t, go to the plugin’s Tools > File editor, where the robots.txt file can be created and edited.

How to create a robots.txt file manually

- Create a new plain text file on your computer and name it “robots.txt” (all lowercase).

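- Copy the correct lines for your site into the file. For a typical WordPress site they are (replace “(sitemap url)” with the full address of your sitemap):

User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
Sitemap: (sitemap url)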

- Save the file.

- Connect to the server where your domain is hosted.

- Open your file manager (provided by your hosting server; you will usually need to connect using an FTP account).

- In the root folder of the site, make sure that there isn’t already a file called robots.txt. If there isn’t, upload the file you created into that folder.

- That’s it! You now have a robots.txt file on the site. Of course, you should open it in your browser (for example https://www.a-designer.co.il/robots.txt, using your own domain) and make sure it loads correctly.
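If you prefer to generate the file with a script rather than typing it by hand, here is a minimal Python sketch. The function names are hypothetical, and any sitemap address you pass in must be your site’s real sitemap URL. It only creates the file locally; uploading it is still done through your file manager or FTP.

```python
from pathlib import Path
from urllib.robotparser import RobotFileParser


def wordpress_robots_txt(sitemap_url: str) -> str:
    """Build the typical WordPress rules with the given sitemap URL."""
    return (
        "User-agent: *\n"
        "Disallow: /wp-admin/\n"
        "Allow: /wp-admin/admin-ajax.php\n"
        f"Sitemap: {sitemap_url}\n"
    )


def save_robots_txt(content: str, directory: Path) -> Path:
    """Write content to directory/robots.txt, refusing to overwrite an existing file."""
    target = directory / "robots.txt"  # exact, all-lowercase file name
    if target.exists():
        raise FileExistsError(f"{target} already exists; edit it instead")
    target.write_text(content, encoding="utf-8")  # robots.txt is expected in UTF-8
    return target


def listed_sitemaps(content: str) -> list[str]:
    """Parse content the way a crawler would and return its Sitemap entries."""
    parser = RobotFileParser()
    parser.parse(content.splitlines())
    return parser.site_maps() or []  # site_maps() returns None when there are none
```

For example, `save_robots_txt(wordpress_robots_txt("https://www.a-designer.co.il/sitemap.xml"), Path("."))` would create robots.txt in the current folder; the sitemap address in that call is only an assumed example.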

Congratulations! You have learned everything you need about the robots.txt file.

Now it is time to move on to “Step 2: Connecting the website to Cloudflare”.